Setting up Airflow for ETL development

This is part of a larger series of development and setup steps to build a self-contained Medical Record Database using ETL and other technologies. While a lot of what I do here can easily be scripted with pure Python, I am always exploring open-source ways to do things in a structured manner. I hope you find the content useful.

Apache Airflow is a powerful platform used to programmatically author, schedule, and monitor workflows. It is particularly popular in the data engineering and data science communities for orchestrating complex data workflows, including data ingestion and transformation tasks, such as running dbt (data build tool) models. Airflow allows users to define workflows as code, making it easy to manage, version, and share workflows across teams.

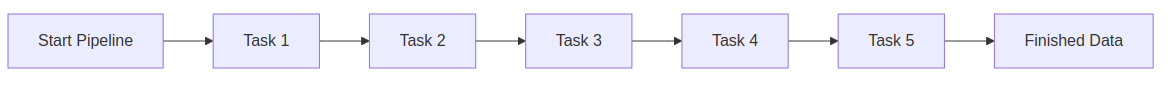

At the core of Apache Airflow is the concept of Directed Acyclic Graphs (DAGs). A DAG is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. Each node in the DAG represents a task, and the edges define the order in which tasks should be executed. Because the graph has no cycles, Airflow can always work out a valid execution order. A task cannot depend on itself, either directly or indirectly, and tasks with no dependency between them are free to run in parallel.
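To make the ordering idea concrete without installing anything, here is a minimal sketch that uses the standard `tsort` utility to derive one valid run order from a list of task dependencies. The `dag_order` helper and the task names are hypothetical. The `a >> b` notation only mimics how Airflow's Python API chains tasks, and Airflow itself does not use `tsort`.

```bash
#!/bin/bash
# dag_order (hypothetical helper): reads dependency lines such as
# "extract >> transform >> load" on stdin and prints one valid run order.
# tsort exits non-zero if the dependencies contain a cycle (i.e. not a DAG).
dag_order() {
  awk -F'>>' '{
    # Trim whitespace around each task name
    for (i = 1; i <= NF; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
    # Emit one "upstream downstream" pair per edge
    for (i = 1; i < NF; i++) print $i, $(i + 1)
    # A line with a single task declares a node with no dependencies
    if (NF == 1 && $1 != "") print $1, $1
  }' | tsort
}

# Hypothetical ETL pipeline: two extracts feed a transform, which feeds a load
printf '%s\n' \
  "extract_patients >> transform_records >> load_warehouse" \
  "extract_visits >> transform_records" | dag_order

# A cycle is rejected by tsort, so this branch prints the warning
if ! printf '%s\n' "extract >> load" "load >> extract" | dag_order >/dev/null 2>&1; then
  echo "Dependency cycle detected: this is not a DAG"
fi
```

When several orders are valid (the two extract tasks above have no dependency on each other), `tsort` picks one of them. Airflow would be free to run those two tasks in parallel instead.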

Apache Airflow is designed to be extensible and integrates seamlessly with a wide range of data tools and services. It supports various operators and hooks that allow you to interact with databases, cloud services, and other data processing tools. This makes it easy to create workflows that involve multiple systems, such as extracting data from a database, transforming it using a tool like dbt, and loading it into a data warehouse.

Airflow provides a rich web-based user interface that allows users to monitor and manage workflows. The UI provides a clear view of the DAGs, their status, and the logs of each task. Users can trigger tasks manually, view task dependencies, and even retry failed tasks directly from the interface. This makes it easy to keep track of complex workflows and quickly identify and resolve issues.

Docker-Compose is particularly useful for setting up Apache Airflow in a development or testing environment, as it allows you to define all the necessary services in a single configuration file and start them with a single command. With one simple bash script, we will download the official docker-compose.yaml, edit it, create a Dockerfile that adds dbt and some provider packages, and start everything.

```bash
#!/bin/bash
# Abort as soon as any command fails (e.g. the download or the build)
set -e

# Define custom host ports (inside the containers the services still use 8080 and 5555)
WEB_SERVER_PORT=8081
FLOWER_PORT=5556

# Create the directories that are mounted into the containers
mkdir -p ./dags ./logs ./plugins

# Run Airflow in the containers with your host user ID so that files
# written to the mounted directories are owned by you
echo -e "AIRFLOW_UID=$(id -u)" > .env

# Download the official docker-compose.yaml for Airflow 2.10.2
curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.10.2/docker-compose.yaml'

# Change permissions to make the file writable
chmod u+w docker-compose.yaml

# Map the web server and Flower to the custom host ports
sed -i "s/8080:8080/${WEB_SERVER_PORT}:8080/g" docker-compose.yaml
sed -i "s/5555:5555/${FLOWER_PORT}:5555/g" docker-compose.yaml

# Remove the image line and uncomment the build line, keeping the
# original indentation so the YAML stays valid
sed -i '/^\s*image:.*apache\/airflow/d' docker-compose.yaml
sed -i 's/^\(\s*\)# build: \./\1build: ./' docker-compose.yaml

# Verify the modification
if grep -q '^\s*build: \.$' docker-compose.yaml; then
  echo "Successfully updated docker-compose.yaml with build context."
  sleep 5
else
  echo "Failed to update docker-compose.yaml with build context." >&2
  exit 1
fi

# Create a Dockerfile that installs dbt and extra provider packages.
# The quoted 'EOF' stops bash from expanding ${AIRFLOW_VERSION}; Docker
# expands it at build time from the base image's environment.
cat <<'EOF' > Dockerfile
FROM apache/airflow:2.10.2

# Pinning apache-airflow to the image's own version keeps pip from
# upgrading or downgrading Airflow while resolving dbt's dependencies
RUN pip install --no-cache-dir \
    "apache-airflow==${AIRFLOW_VERSION}" \
    dbt-core dbt-postgres \
    apache-airflow-providers-slack \
    apache-airflow-providers-amazon \
    apache-airflow-providers-google \
    apache-airflow-providers-postgres

# The base image already runs as the airflow user; this keeps it explicit
USER airflow
EOF

# Build the custom Docker image
docker-compose build

# Initialize the Airflow metadata database and create the default user
docker-compose up airflow-init

# Start Airflow services in detached mode; Flower sits behind the
# "flower" profile in the official file, so enable it explicitly
docker-compose --profile flower up -d

# Output the access information
echo "Airflow is running on http://localhost:${WEB_SERVER_PORT}"
echo "Flower is running on http://localhost:${FLOWER_PORT}"
```

In essence, the script sets a few variables, downloads and patches the official docker-compose.yaml, builds a custom image, and starts the stack. The generated Dockerfile installs dbt along with some Airflow provider packages, and you can modify it to your preferences. I'll share this bash script with this gist. If your environment is Linux, make sure you run 'chmod +x <gist_file>' to make it executable. It goes without saying that you need Docker and docker-compose installed on your system. One quick point: you can set the port variables yourself, which helps if you run a lot of Docker containers. I have many different containers, and I don't want the ports on my host machine conflicting with each other.
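Before picking values for WEB_SERVER_PORT and FLOWER_PORT, it can help to check which host ports are already taken. The sketch below is one way to do that in plain bash. It assumes a Linux host where bash supports the `/dev/tcp` redirection and coreutils `timeout` is available. `port_in_use` and `find_free_port` are hypothetical helpers, not part of the setup script.

```bash
#!/bin/bash
# port_in_use (hypothetical helper): succeeds if something on localhost
# accepts a TCP connection on the given port, via bash's /dev/tcp pseudo-device
port_in_use() {
  timeout 1 bash -c "exec 3<>/dev/tcp/127.0.0.1/$1" 2>/dev/null
}

# find_free_port (hypothetical helper): prints the first port at or above $1
# that nothing is listening on, trying at most $2 ports (default 50).
# Returns 1 if no free port was found within that range.
find_free_port() {
  local port=$1 limit=${2:-50}
  local i
  for ((i = 0; i < limit; i++)); do
    if ! port_in_use "$port"; then
      echo "$port"
      return 0
    fi
    port=$((port + 1))
  done
  return 1
}

WEB_SERVER_PORT=$(find_free_port 8081)
FLOWER_PORT=$(find_free_port 5556)
echo "Web server port: ${WEB_SERVER_PORT}, Flower port: ${FLOWER_PORT}"
```

This is only a point-in-time check. Nothing reserves the port, so another container started in the meantime could still grab it.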

When setting up Apache Airflow using Docker-Compose, several services are typically included:

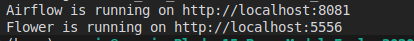

Once the script finishes without errors, the final echo statements print something like this:

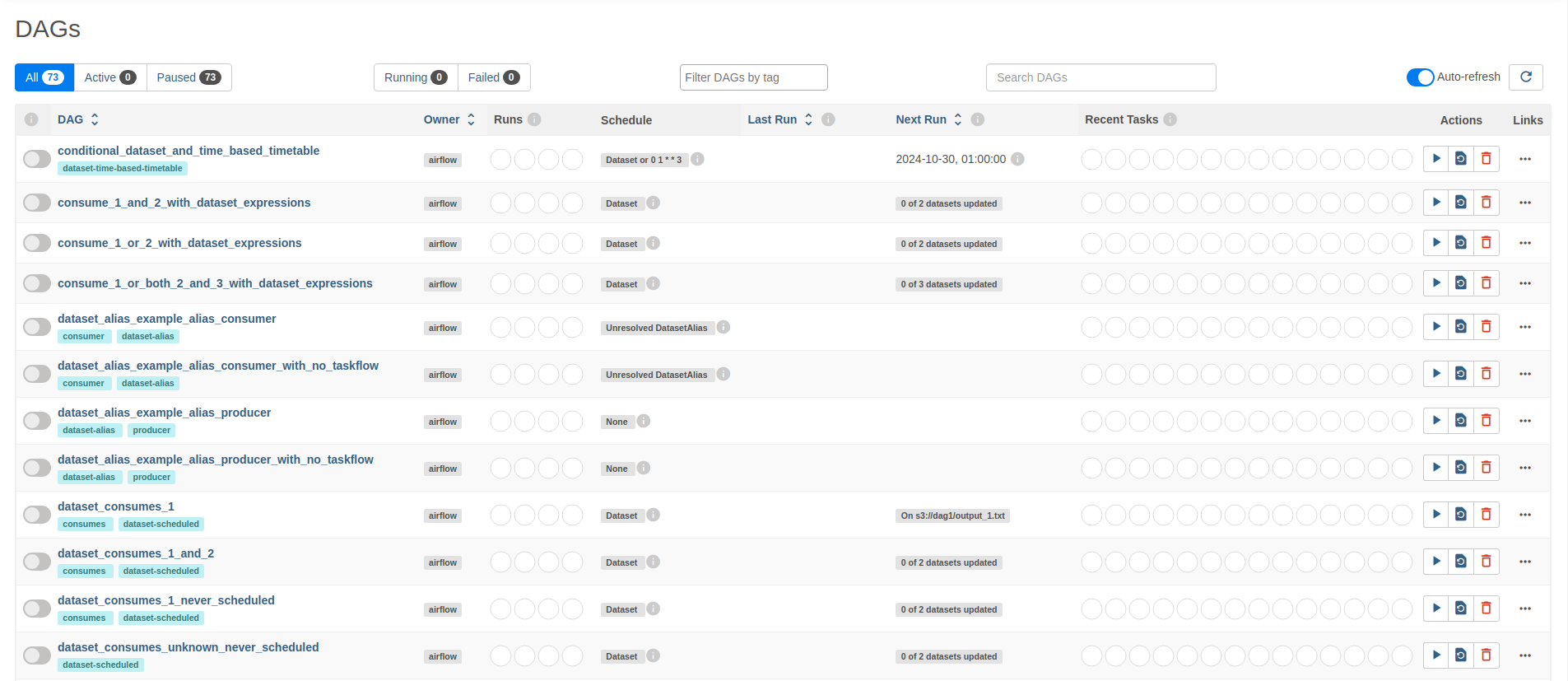
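The containers can take a while to come up after `docker-compose up -d` returns. A small polling loop against the webserver's `/health` endpoint avoids opening the browser too early. `wait_for_airflow` is a hypothetical helper, and the URL in the usage line assumes the custom port 8081 from the script. This only confirms that the webserver answers. The JSON body of `/health` reports the metadatabase and scheduler status separately.

```bash
#!/bin/bash
# wait_for_airflow (hypothetical helper): polls a URL until it answers with a
# successful HTTP status or the timeout (in seconds, default 120) runs out
wait_for_airflow() {
  local url=$1 timeout_s=${2:-120}
  local deadline=$((SECONDS + timeout_s))
  while ((SECONDS < deadline)); do
    # -f makes HTTP error statuses count as failures; -s keeps curl quiet
    if curl -fs --max-time 5 "$url" >/dev/null; then
      echo "Airflow webserver is responding at $url"
      return 0
    fi
    sleep 2
  done
  echo "Timed out waiting for $url" >&2
  return 1
}
```

For example, `wait_for_airflow http://localhost:8081/health 180` waits up to three minutes before giving up.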

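You can now navigate to http://localhost:8081, log in with the default credentials that airflow-init creates (username airflow, password airflow), and view this: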

You will see a lot of example DAGs.
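The examples come from the `AIRFLOW__CORE__LOAD_EXAMPLES: 'true'` setting in the official docker-compose.yaml. If you would rather start with an empty DAG list, one option is to flip that value and restart the stack. The sketch below assumes the downloaded file keeps that exact line, and `hide_example_dags` is a hypothetical helper that uses GNU `sed -i` like the setup script.

```bash
#!/bin/bash
# hide_example_dags (hypothetical helper): switches the compose file's
# AIRFLOW__CORE__LOAD_EXAMPLES setting from 'true' to 'false' in place
hide_example_dags() {
  local file=${1:-docker-compose.yaml}
  sed -i "s/\(AIRFLOW__CORE__LOAD_EXAMPLES:[[:space:]]*\)'true'/\1'false'/" "$file"
  # Print the resulting line so the change is easy to verify
  grep -n 'AIRFLOW__CORE__LOAD_EXAMPLES' "$file"
}
```

For example, run `hide_example_dags docker-compose.yaml` followed by `docker-compose --profile flower up -d` to recreate the containers with the new setting.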

In this tutorial, we explored how to set up Apache Airflow. By leveraging Airflow's powerful workflow orchestration capabilities, you can manage complex data processes more effectively and efficiently. We covered the essential features of Airflow, including Directed Acyclic Graphs (DAGs), integration with various data tools, and the intuitive web interface for monitoring workflows.

Additionally, we walked through the process of using Docker-Compose to simplify the environment setup, ensuring that all necessary services are configured and running seamlessly. With the provided bash script, you can easily customize your Airflow instance to fit your specific needs, including adding plugins and managing port configurations to prevent conflicts.

Now that you have a solid foundation for using Apache Airflow, you can begin to implement it in your own data projects. Whether you're automating data ingestion, transformation, or analysis, Airflow provides a structured and scalable solution that can grow with your needs. I hope this tutorial has equipped you with the knowledge and tools to harness the full potential of Apache Airflow in your workflows.

Hey everyone, let's keep teaching and learning!